21) Parallel and distributed processing across many neuron-like units can lead to complex behaviors (Rumelhart & McClelland – 1986, O'Reilly – 1996)

Pitts & McCulloch provided amazing insight into how brain computations take place. However, their two-layer perceptrons were limited. For instance, they could not implement the logic gate XOR (i.e., 'one but not both'), because no single line through the input space separates XOR's true cases from its false cases. An extra layer was added to solve this problem, but it became clear that the Pitts & McCulloch perceptrons could not learn anything requiring more than two layers: there was no rule for adjusting the weights of the added layer.
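To make the XOR limitation concrete, here is a minimal sketch. It assumes a "two-layer" network means an input layer wired directly to one all-or-none threshold unit, and it trains that unit with the classic perceptron learning rule (an assumption on our part; the post does not specify a rule). The names `step`, `train_perceptron`, and `xor_hand_wired` are hypothetical. AND and OR are learned, XOR never is; XOR only becomes possible once an extra layer is added, and even then the weights below are set by hand rather than learned.

```python
# Sketch: a single threshold unit (inputs wired straight to one output unit),
# trained with the classic perceptron learning rule (assumed, for illustration).

def step(net):
    """All-or-none threshold: fire (1) only if the net input is positive."""
    return 1 if net > 0 else 0

def train_perceptron(data, epochs=100, lr=1.0):
    """Return (weights, bias, converged) after up to `epochs` passes."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), target in data:
            err = target - step(w[0] * x1 + w[1] * x2 + b)
            if err != 0:
                mistakes += 1
                # Nudge the weights and bias toward the correct answer.
                w[0] += lr * err * x1
                w[1] += lr * err * x2
                b += lr * err
        if mistakes == 0:
            return w, b, True  # every pattern classified correctly
    return w, b, False  # never got all four patterns right

def xor_hand_wired(x1, x2):
    """XOR using an extra (hidden) layer whose weights are chosen by hand."""
    h_or = step(x1 + x2 - 0.5)        # fires for "at least one"
    h_and = step(x1 + x2 - 1.5)       # fires for "both"
    return step(h_or - h_and - 0.5)   # "at least one, but not both"

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
OR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for name, data in [("AND", AND), ("OR", OR), ("XOR", XOR)]:
    print(name, "learned:", train_perceptron(data)[2])
```

Because AND and OR are linearly separable, the perceptron convergence theorem guarantees the loop stops; for XOR it simply runs out of epochs.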

Rumelhart solved this problem with two insights.

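First, he implemented a non-linear sigmoid activation function (a smooth approximation of a neuronal threshold), which turned out to be essential for the next insight: unlike an all-or-none threshold, the sigmoid is differentiable, so a small change in a unit's input produces a graded change in its output.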

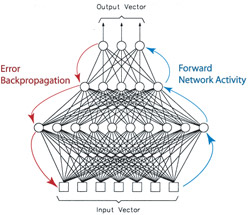
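A short sketch of the sigmoid and why it matters. The `gain` parameter is our own illustrative addition: as it grows, the sigmoid approaches the all-or-none threshold, yet it stays differentiable, with the convenient derivative s(1 − s) (scaled by the gain). The function names are hypothetical.

```python
import math

def sigmoid(x, gain=1.0):
    """Logistic sigmoid, written to avoid overflow for large |gain * x|."""
    z = gain * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def sigmoid_deriv(x, gain=1.0):
    """Slope of the sigmoid: gain * s * (1 - s). Never zero, unlike a step."""
    s = sigmoid(x, gain)
    return gain * s * (1.0 - s)

def step(x):
    """The hard threshold the sigmoid approximates (slope 0 almost everywhere)."""
    return 1.0 if x > 0 else 0.0

for gain in (1.0, 5.0, 50.0):
    print(gain, [round(sigmoid(x, gain), 3) for x in (-1.0, -0.1, 0.0, 0.1, 1.0)])
```

The step function's slope is zero everywhere except at the threshold, so it gives a learning algorithm no information about which direction to move a weight; the sigmoid's nonzero slope does.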

Second, he developed an algorithm called 'backpropagation of error', which propagates the output layer's error back through every layer so that each connection's weight can be adjusted in proportion to its contribution to that error (i.e., the error is corrected in a distributed fashion). See P.L.'s previous post on the topic for further details.
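As a rough illustration, here is a minimal NumPy sketch of backpropagation learning XOR. It assumes a 2-input, 4-hidden, 1-output network of sigmoid units, a squared-error loss, and full-batch gradient descent; the function names and hyperparameters are our own illustrative choices, not taken from the PDP volumes. Note how the sigmoid's derivative, y(1 − y), appears in each layer's error term.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# The four XOR patterns and their targets.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
Y = np.array([[0], [1], [1], [0]], dtype=float)

def init_params(n_hidden=4, seed=0):
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0, 1, (2, n_hidden)), "b1": np.zeros(n_hidden),
        "W2": rng.normal(0, 1, (n_hidden, 1)), "b2": np.zeros(1),
    }

def forward(p, X):
    h = sigmoid(X @ p["W1"] + p["b1"])   # hidden layer
    y = sigmoid(h @ p["W2"] + p["b2"])   # output layer
    return h, y

def loss(p, X, Y):
    _, y = forward(p, X)
    return 0.5 * np.sum((y - Y) ** 2)

def backprop(p, X, Y):
    """Gradients of `loss` with respect to every parameter."""
    h, y = forward(p, X)
    # Output error, scaled by the sigmoid's slope y(1 - y).
    delta_out = (y - Y) * y * (1 - y)
    # Send that error backward through W2 to assign blame to hidden units.
    delta_hid = (delta_out @ p["W2"].T) * h * (1 - h)
    return {
        "W2": h.T @ delta_out, "b2": delta_out.sum(axis=0),
        "W1": X.T @ delta_hid, "b1": delta_hid.sum(axis=0),
    }

def train(p, X, Y, lr=0.5, epochs=5000):
    for _ in range(epochs):
        g = backprop(p, X, Y)
        for k in p:
            p[k] -= lr * g[k]   # gradient descent step
    return p

p = train(init_params(), X, Y)
print(np.round(forward(p, X)[1].ravel(), 2))
```

With settings like these the network usually learns XOR, but small networks can get stuck in local minima, so a different seed or more hidden units may be needed.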

Rumelhart & McClelland used this new learning algorithm to explore how cognition might be implemented in a parallel and distributed fashion in neuron-like units. Many of their insights are documented in the two-volume PDP series.

Unfortunately, the backpropagation of error algorithm is not very biologically plausible. Signals have never been shown to flow backward across synapses in the manner necessary for this algorithm to be implemented in actual neural tissue.

However, O'Reilly (whose thesis advisor was McClelland) expanded on the recirculation algorithm of Hinton & McClelland (1988) to implement a biologically plausible version of backpropagation of error. This is called the generalized recirculation (GeneRec) algorithm, and it is closely related to the contrastive Hebbian learning algorithm.
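For reference, the two rules can be summarized as weight updates. The notation below is our own paraphrase of how O'Reilly & Munakata present them, not a quotation: $x_i$ is a sending unit's activation, $y_j$ a receiving unit's activation, $\epsilon$ a learning rate, a superscript $-$ marks the "minus" phase (the network settles on its own expectation with only the input clamped), and $+$ marks the "plus" phase (the actual outcome is clamped as well).

$$
\Delta w_{ij}^{\mathrm{GeneRec}} = \epsilon\, x_i^{-}\left(y_j^{+} - y_j^{-}\right)
\qquad
\Delta w_{ij}^{\mathrm{CHL}} = \epsilon\left(x_i^{+} y_j^{+} - x_i^{-} y_j^{-}\right)
$$

The $(y_j^{+} - y_j^{-})$ term plays the role of backprop's error signal, but it is computed from locally available activations in two phases rather than being sent backward across synapses.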

O'Reilly and McClelland view the backpropagating error signal as the difference between the expected outcome and the perceived outcome. Under this interpretation, these algorithms are quite general, applying to perception as well as action.
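A minimal sketch of that idea, assuming a GeneRec-style update in a small input → hidden → output network of sigmoid units. The network first settles on its own expectation (output free), then settles again with the perceived outcome clamped on the output, and the difference between the two phases drives learning. The symmetric feedback weights (`W_ho.T`), the fixed number of settling steps, and all names here (`settle`, `generec_update`) are simplifying assumptions for illustration; this is not the implementation in the book's simulations.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def settle(x, W_ih, W_ho, b_h, b_o, target=None, steps=50):
    """Let activations settle. Minus phase (target=None): the output is free,
    so it is the network's expectation. Plus phase: the output is clamped to
    the perceived outcome. Hidden units get top-down input via W_ho.T
    (assumed symmetric feedback weights)."""
    h = np.full(W_ih.shape[1], 0.5)
    y = np.full(W_ho.shape[1], 0.5) if target is None else np.asarray(target, dtype=float)
    for _ in range(steps):
        h = sigmoid(x @ W_ih + y @ W_ho.T + b_h)
        if target is None:
            y = sigmoid(h @ W_ho + b_o)
    return h, y

def generec_update(x, target, params, lr=0.2):
    """One GeneRec-style step; updates `params` in place and
    returns the error signal (perceived outcome - expected outcome)."""
    W_ih, W_ho, b_h, b_o = params
    h_minus, y_minus = settle(x, *params)                 # expectation
    h_plus, y_plus = settle(x, *params, target=target)    # outcome
    # Sender's minus-phase activity times receiver's (plus - minus) difference.
    W_ho += lr * np.outer(h_minus, y_plus - y_minus)
    W_ih += lr * np.outer(x, h_plus - h_minus)  # x is clamped in both phases
    b_o += lr * (y_plus - y_minus)
    b_h += lr * (h_plus - h_minus)
    return y_plus - y_minus

rng = np.random.default_rng(0)
params = (rng.normal(0, 0.1, (3, 4)), rng.normal(0, 0.1, (4, 2)),
          np.zeros(4), np.zeros(2))
x = np.array([1.0, 0.0, 1.0])
outcome = np.array([1.0, 0.0])
for _ in range(200):
    err = generec_update(x, outcome, params)
print("remaining expectation error:", np.round(err, 3))
```

No signal travels backward across a synapse here: every update uses only the activations of the two units a connection joins, sampled in the two phases.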

The backprop and generalized recirculation algorithms are described in a clear and detailed manner in Computational Explorations in Cognitive Neuroscience by O'Reilly & Munakata. These algorithms can be explored by downloading the simulations accompanying the book (available for free).

Implication: The mind, largely governed by reward-seeking behavior on a continuum between controlled and automatic processing, is implemented in an electro-chemical organ with distributed and modular function consisting of excitatory and inhibitory neurons communicating via ion-induced action potentials over convergent and divergent synaptic connections altered by timing-dependent correlated activity often driven by expectation errors. The cortex, a part of that organ organized via local competition and composed of functional column units whose spatial dedication determines representational resolution, is composed of many specialized regions involved in perception (e.g., touch: parietal, vision: occipital), action (e.g., frontal), and memory (e.g., short-term: prefrontal, long-term: temporal), which depend on inter-regional communication for functional integration.

[This post is part of a series chronicling history's top brain computation insights (see the first of the series for a detailed description). See the history category archive to see all of the entries thus far.]

-MC